Politics, lifehacking, data mining, and a dash of the scientific method from an up-and-coming policy wonk.

Monday, January 31, 2011

QMD - A framework for prioritizing research ideas

Every research paper is a combination of three main parts: 1) a question, 2) a methodology, and 3) data. For instance, the Druckman paper [from this week's reading] asks 1) when elite framing can influence opinion using 2) an experiment where 3) college students read and discuss news articles. ...

(By the way, "data" includes any kind of fact you'd use to build your argument, not just numbers. Surveys, content analysis, government statistics, experimental results, interviews, historical case studies, etc. are all data -- they're just analyzed differently.)

The combination of QMD that you choose largely determines how hard it will be to carry out the project, the kinds of conclusions you will be able to draw, and the threats to validity that critics can use against you. Choosing the QMD for a research project is like choosing a major in college -- in a lot of ways, all the later decisions are just details.

When you choose which Q, M, and D to use, you can either come up with something completely new, or borrow ideas from past studies. It is much, much harder to come up with new questions, methods and data than it is to borrow from past research. As a strong rule of thumb, you only want one of your three parts to be new. So if you're asking a new question, use familiar methods and easy-to-find data. (This isn't plagiarism because you're completely up front about the idea that you're building on previous work.)

Probably the easiest kind of study to pull off is a replication study, using new data with old methods and questions. For example, to deal with one of the threats to validity in Druckman et al.'s study, you might do the same experiment with a representative sample of people, instead of just college students. Same question, same method, different data. This would still take work, but there aren't a lot of unknowns in the process, and anyone who believes Druckman and Nelson will probably believe your results as well. Replication studies are good for boosting ("Not only are Druckman and Nelson right about framing effects for college students -- they're right about all American adults as well!") or cutting back ("Druckman and Nelson's findings only apply to people under 30. Everyone else is much less susceptible to elite framing.") the scope of previous findings.

The next hardest is applying a new method to an old question. For example, you might ask when elite framing influences public opinion using a series of carefully timed surveys asking the same questions that Druckman et al. used. Similar question, different method, similar data. This is a little harder, because you'd have to use different skills, build a new kind of argument, and deal with new threats to validity. On the plus side, different methods have different threats to validity, so if you use a new method, you can probably address some of the threats to validity that previous studies couldn't. For example, timed surveys could deal with the "recency effects" that Druckman can't do much about.

Introducing new questions is the hardest of all, for at least three reasons. First, you have to convince people that your question is worth asking. This is harder than it sounds. In my experience, people like to think they already understand the world pretty well, so they will resist the notion that you've found a blind spot in their worldview. Second, you have to be able to convince people that you're the first one to ask the question. Third, you have to build an entirely new line of evidence to defend your reasoning. With a one-semester deadline, I'd advise against trying to introduce a new question, just because it's so hard to do.

Anyway, that might be more than you want to know, but it's the way I decide what research projects to take on. QMD is my framework for figuring out what topics are in the overlap of "interesting" and "doable."

Thoughts?

Thursday, January 27, 2011

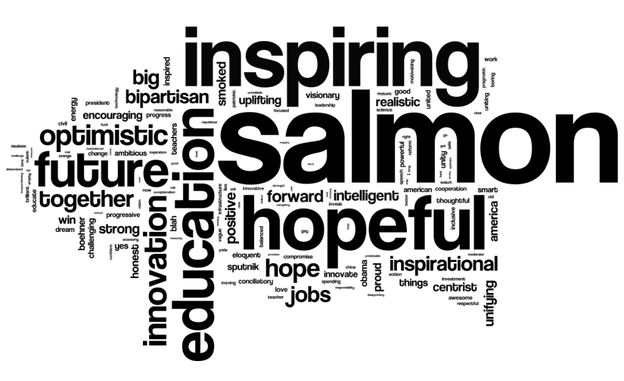

Inspiring, hopeful, education, and salmon -- Not in that order

Pennsylvania Policy Database Project

Wednesday, January 26, 2011

bubbl.us: A great little tool for flowcharts

Two days ago, I found this great little tool for flowcharts: bubbl.us. It's free unless you want the premium license, and it has a really nice interface. Once you learn how to tab and shift-click and everything, you can sketch out complicated charts very quickly. If you allow it some room on your hard drive, you can even save maps between sessions. I'm using it primarily for mapping workflow, but I could see it being useful for brainstorming, simple org charts, or flow diagrams too.

And with that said, here's the map through the dissertation quagmire to the end of the semester.

Thursday, January 13, 2011

An Interview with Ronald Coase

RC: Nothing guarantees success. Given human fallibility, we are bound to make mistakes all the time.

WN: So the question is how we can learn from experiments at minimal cost. Or, how could we structure our economy and society in such a way that collective learning can be facilitated at a bearable price?

RC: That’s right. Hayek made a good point that knowledge was diffused in society and that made central planning impossible.

WN: The diffusion of knowledge creates another social problem: conflict between competing ideas. To my knowledge, only people fight for ideas (religious or ideological), only people are willing to die for their ideas. The animal world might be bloody and uncivilized. But animals, as far as we know, do not fight over ideas.

RC: That’s probably right. That’s why we need a market for ideas. Ideas can compete; people with different ideas do not need to slaughter each other.

Really interesting tension. I'm still working through what to make of it. Collectively, we're smarter because we disagree? And vice versa? Snipped here (Knowledge Problem just made my blogroll), with a hat tip to Marginal Revolution.

Saturday, January 1, 2011

Optimal Poker AI -- I told you so!

I've thought back to that conversation several times, mainly because it was really frustrating for both of us. I had a proof backing me up -- von Neumann's 80-year-old minimax theorem* -- an absolutely airtight mathematical proof, but I still couldn't convince him. The problem is that it's an existence proof: it demonstrates that the strategy must exist, but doesn't tell you what the strategy is. And of course, arguing that "my math says there's an optimal strategy" rings pretty hollow when you can't answer the question, "So what's the strategy?"
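For very small games, the optimal strategy can actually be written down directly, which makes the existence claim feel less abstract. Here's a minimal Python sketch (my own illustration, not from the paper below) that solves a 2x2 zero-sum game with integer payoffs, assuming it has no saddle point, so both players have to randomize. The function name `solve_2x2_zero_sum` and the matrix layout are my choices. Games the size of real poker are far too big for this closed form and are typically solved with linear programming or iterative methods instead.

```python
from fractions import Fraction


def solve_2x2_zero_sum(a, b, c, d):
    """Optimal mixed strategies for a 2x2 zero-sum game.

    Payoffs (integers or Fractions) to the row player; the column
    player gets the negative:
        [[a, b],
         [c, d]]
    Assumes the game has no saddle point, so both players must randomize.
    Returns (p, q, v): p = P(row player picks row 0),
    q = P(column player picks column 0), v = value of the game.
    """
    denom = a - b - c + d
    if denom == 0:
        raise ValueError("no fully mixed solution; look for a saddle point")
    # Each player mixes so the opponent is indifferent between their two options.
    p = Fraction(d - c, denom)
    q = Fraction(d - b, denom)
    if not (0 < p < 1 and 0 < q < 1):
        raise ValueError("no fully mixed solution; look for a saddle point")
    v = Fraction(a * d - b * c, denom)
    return p, q, v


# Matching pennies: the row player wins 1 on a match and loses 1 otherwise.
print(solve_2x2_zero_sum(1, -1, -1, 1))
```

For matching pennies this returns p = q = 1/2 and a value of 0, i.e. "flip a fair coin." A lopsided game like `solve_2x2_zero_sum(3, -1, -2, 1)` gives p = 3/7, q = 2/7, and a value of 1/7 for the row player.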

So I was very happy to run across this paper and demo presenting the optimal strategy for Rhode Island Hold'em. Rhode Island poker is a stripped-down version of Texas Hold'em (get it?). You play with a smaller deck, only one hole card, a one-card flop, and a one-card turn, but no river. There are a few limits on betting, but the game is essentially the same. According to the abstract, "Some features of the [optimal strategy] include poker techniques such as bluffing, slow-playing, check-raising, and semi-bluffing."

Very nifty proof of concept.

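* A little bit more on the math. The minimax theorem states that every one-on-one, winner-take-all (technically: finite, two-player, zero-sum) game has an optimal strategy profile, though the optimal strategies may need to be randomized. A quick FAQ on what it means for a strategy to be optimal.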

So what does it mean that the strategy is optimal?

The technical definition is that it's an equilibrium strategy. If both players are playing the optimal strategy, neither has an incentive to deviate and play anything else. As one of my profs would say, it's prediction-proof: even if we both know each other's strategies, neither of us has an incentive to deviate.
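To make "no incentive to deviate" concrete, here's a minimal Python sketch that checks whether a pair of mixed strategies is an equilibrium. It uses rock-paper-scissors as a stand-in game (my choice, not from the paper), and the helper names `expected_payoff`, `pure`, and `is_equilibrium` are hypothetical. The check only tries pure-strategy deviations, which is enough because any mixed deviation pays an average of pure-strategy payoffs.

```python
from fractions import Fraction

# Rock-paper-scissors payoffs to the row player; rows and columns are
# ordered rock, paper, scissors.
RPS = [[0, -1, 1],
       [1, 0, -1],
       [-1, 1, 0]]


def expected_payoff(x, A, y):
    """Row player's expected payoff when row mixes with x and column with y."""
    return sum(x[i] * A[i][j] * y[j]
               for i in range(len(x)) for j in range(len(y)))


def pure(k, size):
    """Mixed-strategy vector that always plays option k."""
    return [1 if i == k else 0 for i in range(size)]


def is_equilibrium(x, y, A):
    """True if neither player gains by switching to any pure strategy.

    Checking pure deviations is enough: any mixed deviation pays a weighted
    average of pure-strategy payoffs, so it can't beat the best pure one.
    """
    v = expected_payoff(x, A, y)
    m, n = len(A), len(A[0])
    row_gains = any(expected_payoff(pure(i, m), A, y) > v for i in range(m))
    # The column player wants the row player's payoff to be *lower*.
    col_gains = any(expected_payoff(x, A, pure(j, n)) < v for j in range(n))
    return not (row_gains or col_gains)


uniform = [Fraction(1, 3)] * 3
always_rock = [1, 0, 0]
print(is_equilibrium(uniform, uniform, RPS))      # True
print(is_equilibrium(always_rock, uniform, RPS))  # False: column switches to paper
```

This is the "prediction-proof" idea in miniature: announcing the uniform strategy gives your opponent nothing to exploit, while announcing "always rock" does.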

So this strategy always wins?

No. There is some luck in the game. But if you played it repeatedly, there's no strategy you could use to come out ahead of it in the long run. And against almost any non-optimal strategy you'd play, the computer would come out ahead.
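For the mathematically inclined, here is the textbook form of that long-run guarantee for a finite two-player zero-sum game. The notation is mine, not the paper's: $A$ is the matrix of payoffs to player 1, and $x$ and $y$ are the two players' mixed strategies, i.e. probability vectors over their pure strategies.

```latex
\max_{x \in \Delta_m} \min_{y \in \Delta_n} x^\top A y
  \;=\; \min_{y \in \Delta_n} \max_{x \in \Delta_m} x^\top A y
  \;=\; v
```

Here $\Delta_k$ is the set of probability vectors of length $k$, and $v$ is the value of the game. An optimal strategy $x^*$ for player 1 guarantees an expected payoff of at least $v$ no matter what player 2 does. The guarantee is about the average over many hands, not about any single hand, which is why luck still matters.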

Wouldn't an "optimal strategy" be really predictable?

No. In poker, it's often useful to bluff and keep your opponent guessing, so the optimal strategy is partly random. That means that when facing a given situation (e.g., after the flop, I have X cards, I did Y pre-flop, and my opponent did Z), the computer will flip a weighted coin to make its decision (e.g., 3/4 of the time I call, 1/8 of the time I raise, and 1/8 of the time I fold). So the strategy is perfectly well-defined, but still unpredictable at times.
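Here's what the "weighted coin" might look like in Python. This is a sketch under stated assumptions: the strategy table and its probabilities are hypothetical placeholders, not values from the Rhode Island Hold'em solution, and a real strategy would store one such table for every game situation.

```python
import random
from collections import Counter

# Hypothetical mixed strategy for ONE game situation: action -> probability.
# These numbers are placeholders, not taken from the Rhode Island Hold'em solution.
STRATEGY = {"call": 0.75, "raise": 0.125, "fold": 0.125}


def choose_action(strategy, rng=random):
    """Sample one action with the probabilities given in `strategy`."""
    actions = list(strategy)
    weights = [strategy[a] for a in actions]
    return rng.choices(actions, weights=weights, k=1)[0]


# Seeded generator so repeated runs give the same sequence of decisions.
rng = random.Random(2011)
counts = Counter(choose_action(STRATEGY, rng) for _ in range(10_000))
print({action: counts[action] / 10_000 for action in STRATEGY})
```

Over many draws the frequencies land close to 3/4, 1/8, and 1/8, so the strategy is fully specified, but any single decision is still a surprise to the opponent.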

Does it know how to play short-stack and long-stack poker?

I don't think so. As far as I can tell, Rhode Island poker is played one hand at a time, with limits on betting. So the optimal strategy doesn't need to know anything about stack size, escalating blinds, going all in, etc.

If you introduced those elements, you'd be playing a different game. According to the minimax theorem, there would still be an optimal strategy, but it would probably be at least a little different.