Brand sentiment showdown

Many brands have Twitter accounts that exist to uphold the image of the company they represent. As consumers, we can communicate with these accounts, voicing praise or displeasure (usually the latter). Using a simple sentiment classifier (see the note at the end), I scored feelings towards major brands from 0 (horrible) to 100 (excellent) once a day for five days.

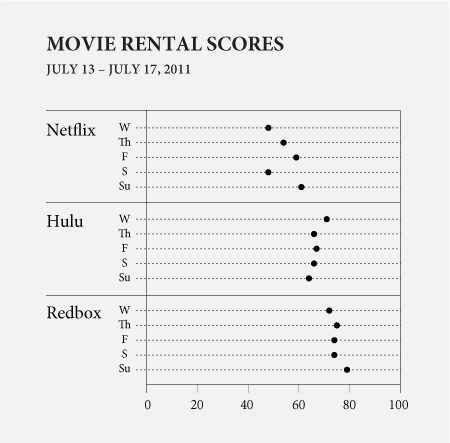

The chart above, for example, shows scores for Netflix, Hulu, and Redbox. Netflix had the lowest scores, whereas Redbox had the highest. I suspect Netflix started low with people still upset over the price hike, but it got better over the next couple of days. Then on Saturday there was a score drop, which I'm guessing was from their downtime for most of that day. Hulu and Redbox, on the other hand, held steadier scores.

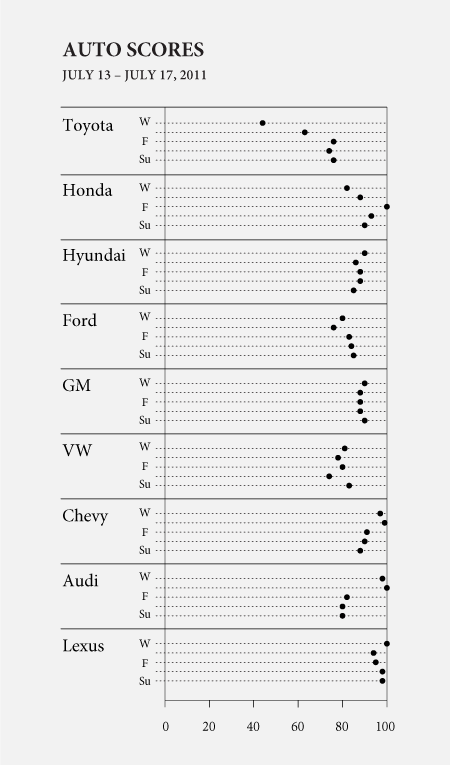

As for auto brands, Toyota clearly had the lowest scores. However, Lexus, which is actually the luxury vehicle division of Toyota, had the highest scores, ranging from the high 90s to 100.

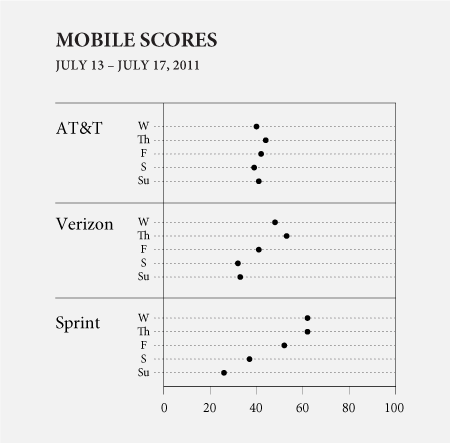

How about the major mobile phone companies, AT&T, Verizon, and Sprint? Verizon scored better initially, but had lower scores during the weekend. Not sure what was going on with Sprint.

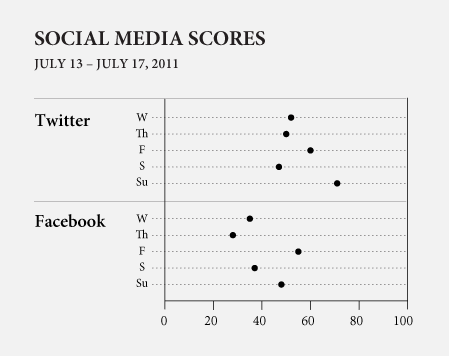

Between Twitter and Facebook, there was obviously some bias, but Twitter fared slightly better. Twitter scored lower than I expected, but that probably has to do with bug reports directed towards @twitter.

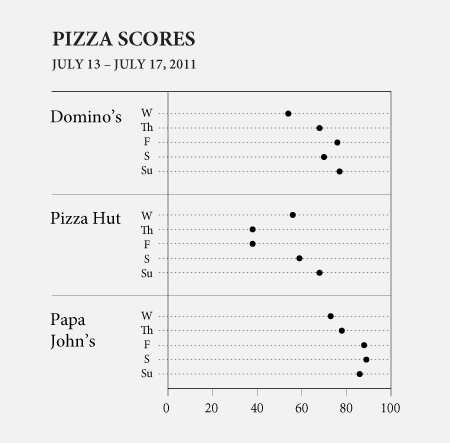

Is Domino's Pizza good now? Papa John's stayed fairly steady while Pizza Hut scores were sub-par.

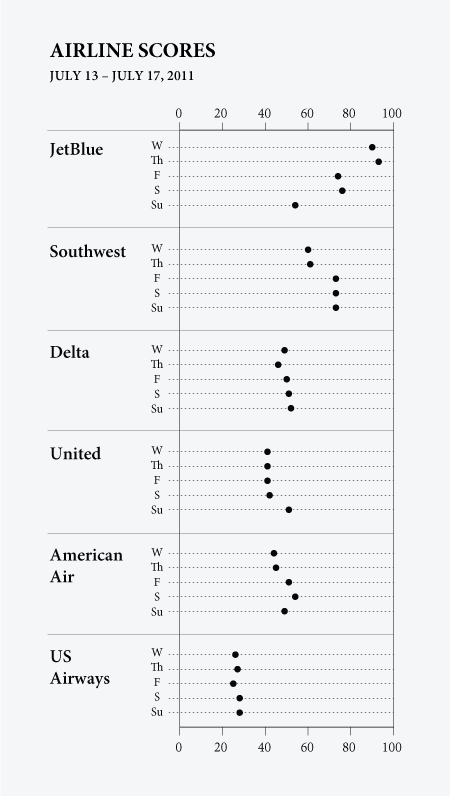

Finally, as a sanity check, I compared airlines like Breen did in his tutorial. Results were similar, with JetBlue and Southwest clearly in the positive and the others bringing up the rear.

Any of these scores seem surprising to you?

- Jeffrey Breen provides an easy-to-follow tutorial on Twitter sentiment in R. The scoring system is pretty basic. You load tweets that match a given search phrase, then find all the 'good' words and 'bad' words in each one. Each good word counts +1, and each bad word counts -1. A tweet is classified as good or bad based on its total. For the final score, only tweets with a total of +2 or more, or -2 or less, are counted. The final score is the number of positive tweets divided by the total number of these 'extreme' tweets, scaled to run from 0 to 100. Obviously this won't pick up on sarcasm, but the scoring still seems to do a decent job. I wouldn't make any important business decisions based on these results though.
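
For the curious, here is a rough Python sketch of the scoring described in the note. It is not Breen's R code. The word lists are tiny hypothetical stand-ins for a real opinion lexicon, and the function names and tokenizer are my own. It also assumes the final score is the share of 'extreme' tweets that are positive, scaled to 0-100, so that it lines up with the 0 (horrible) to 100 (excellent) scale used in the charts.

```python
import re

# Hypothetical, deliberately tiny word lists; a real run would use a full opinion lexicon.
GOOD_WORDS = {"good", "great", "love", "awesome", "excellent"}
BAD_WORDS = {"bad", "awful", "hate", "terrible", "worst"}


def tweet_score(text):
    """Return (number of good words) minus (number of bad words) for one tweet."""
    words = re.findall(r"[a-z']+", text.lower())
    good = sum(w in GOOD_WORDS for w in words)
    bad = sum(w in BAD_WORDS for w in words)
    return good - bad


def brand_score(tweets, threshold=2):
    """Return the 0-100 share of 'extreme' tweets that are positive.

    Only tweets scoring >= +threshold or <= -threshold are counted.
    Returns None when no tweet is extreme enough to count.
    """
    scores = [tweet_score(t) for t in tweets]
    very_pos = sum(s >= threshold for s in scores)
    very_neg = sum(s <= -threshold for s in scores)
    extreme = very_pos + very_neg
    if extreme == 0:
        return None
    return round(100 * very_pos / extreme)


sample_tweets = [
    "I love this, great service",       # +2, counted as positive
    "worst airline, terrible delays",   # -2, counted as negative
    "awful and bad, hate it",           # -3, counted as negative
    "it was good",                      # +1, too mild to count
    "meh",                              #  0, too mild to count
]
print(brand_score(sample_tweets))
```

Mildly worded tweets drop out entirely, so with few tweets the score can swing a lot from day to day, which is worth keeping in mind when reading the charts.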
